Transformer

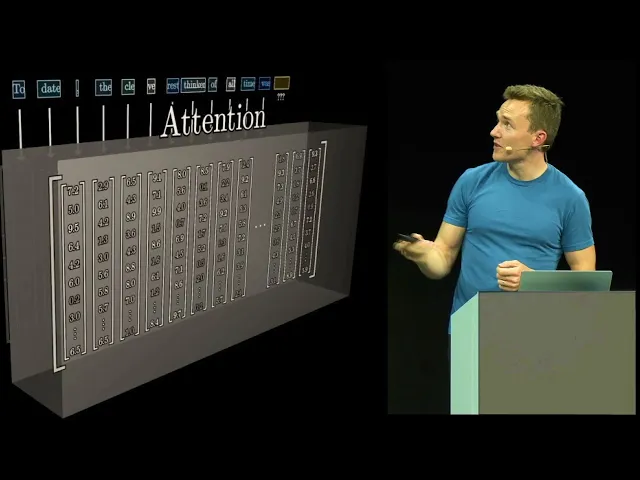

The key innovation behind transformers is something called “attention.” Older models, such as recurrent neural networks (RNNs), read text one word at a time. Transformers instead look at all the words in a sentence at once and weigh how strongly each word relates to every other word. This makes them much better at understanding context, meaning, and nuance.
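To make the idea concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It assumes a simplification: the queries, keys, and values are just the input vectors themselves, whereas a real transformer learns separate projections for each. The three-word "sentence" and its 2-D vectors are hypothetical toy data. Each word is scored against every word in the sentence, the scores become weights via softmax, and the output for that word is a weighted mix of all the words.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Toy scaled dot-product self-attention.

    Assumption: queries, keys, and values are the raw input vectors
    (real transformers use learned query/key/value projections).
    """
    d = len(x[0])
    outputs, weights = [], []
    for q in x:
        # Compare this word with every word in the sentence, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # The output is the attention-weighted average of all value vectors.
        out = [sum(w[j] * x[j][i] for j in range(len(x))) for i in range(d)]
        outputs.append(out)
    return outputs, weights

# Hypothetical 2-D "embeddings" for a three-word sentence.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(sentence)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how much one word "attends" to every word in the sentence, and each row sums to 1.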

Another big advantage: transformers are highly parallelizable. Because attention doesn't have to wait for the previous word to be processed, the computation for every position in a sequence can run at the same time on hardware like GPUs, which makes transformers faster and more efficient to train. Thanks to this, researchers were able to build much larger, more capable models, and that’s what led to the leap from simple chatbots to advanced AI assistants.
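The difference in dependency structure can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not how production models are implemented: the inputs are hypothetical scalars instead of vectors, and `rnn_states` and `attend` are made-up helper names. In the RNN-style loop, each state needs the previous one, so the steps must run in order. In the attention-style function, each position depends only on the inputs, so all positions can be computed independently. The thread pool here only demonstrates that independence (Python threads won't actually speed this up); real frameworks get the speedup by batching the work into GPU matrix operations.

```python
import math
from concurrent.futures import ThreadPoolExecutor

def rnn_states(xs, w=0.5):
    # Each hidden state depends on the previous one, so steps run in order.
    h = 0.0
    states = []
    for x in xs:
        h = math.tanh(w * h + x)
        states.append(h)
    return states

def attend(i, xs):
    # Position i needs only the inputs, not other positions' outputs,
    # so every position can be computed independently.
    scores = [xs[i] * xj for xj in xs]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return sum(e / total * xj for e, xj in zip(exps, xs))

xs = [0.2, -1.0, 0.7, 1.5]  # hypothetical scalar inputs
print(rnn_states(xs))

# Compute all attention positions concurrently, then sequentially.
with ThreadPoolExecutor() as pool:
    parallel = list(pool.map(lambda i: attend(i, xs), range(len(xs))))
sequential = [attend(i, xs) for i in range(len(xs))]
print(parallel == sequential)  # True
```

Because no position waits on another, the order in which positions are computed doesn't change the result.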

Today, almost every major AI model, including those behind ChatGPT, Claude, Gemini, and Mistral, is built on the transformer architecture.

If you want to dig deeper, this explanation is a great starting point. And here’s a more technical and visual deep dive if you're up for it.

© 2025 Kumospace, Inc. d/b/a Fonzi®