SF AI Engineers: February w/ Tesla, Distributional, & Eventual Presenting

Wed, Feb 4, 06:00 PM

Three engineers. Three companies. One shared realization: we've been thinking about AI engineering all wrong.

This meetup got into the stuff that actually keeps engineers up at night. Not benchmarks, not demos, but the messy reality of building AI systems that work in production. Scott, YK, and Wasim each came in with a different angle, but they all landed in the same place.

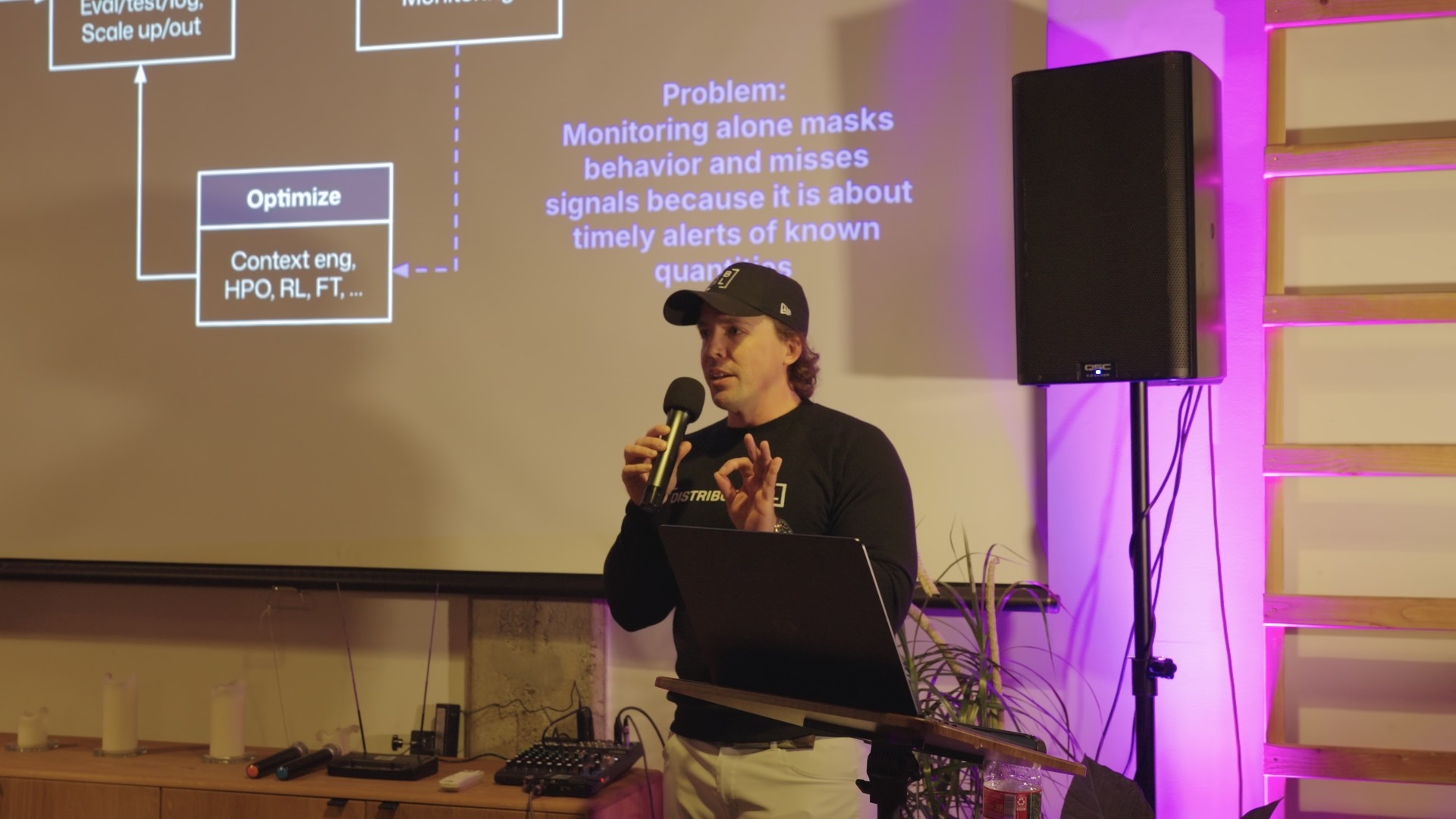

Scott Clark, Co-founder and CEO at Distributional

After 10 years of building AI companies, Scott admitted he'd been solving the wrong problem. Everyone has been obsessed with improving model performance, but that isn't what's blocking AI adoption. Confidence is. Nobody loses sleep over bumping evals by 0.5%; they lose sleep over not knowing what their AI is actually doing out in the wild.

His team started with traditional testing, hit a wall (GenAI models are just too unpredictable for that), and pivoted to treating agents the way web analytics treats users: tracking their journeys and surfacing patterns you didn't know to look for. His advice: stop trying to catch every issue before production and start understanding what's happening in production.

YK, Engineering Team at Eventual

YK has been doing "agentic coding" for 2.5 years, starting back when most people thought AI coding meant pasting snippets from ChatGPT. He laid out four levels, ranging from letting the AI run wild (great for throwaway prototypes, a nightmare for anything you have to maintain) all the way to staff-engineer-level work, where you understand every line the AI generates instead of just vibe-checking it.

Most engineers are stuck at Level 1, letting the AI run wild, and can't figure out why their AI-generated code falls apart. His point: AI coding and traditional software engineering aren't opposites. The engineers who get this right treat them as complementary.

Wasim, Commercial UIs Team at Tesla Energy

Wasim's insight was deceptively simple: when engineers diagnose problems, they don't stare at spreadsheets. They graph things and look for visual patterns. So he ran an experiment, feeding LLMs screenshots of charts instead of raw time-series data, and it worked: the model picked up on the same visual cues a human would.

His bigger takeaway: building AI agents is less about coding and more about management. What tools are you giving the agent? What does it actually see? The answers to those questions matter far more than the choice of model.

The common thread: AI engineering is less about the model and more about how you work with it. The systems and processes you build around the AI are where the real work is.

That's what we talk about every month at SF AI Engineers. Real engineers, real production systems, real lessons.

© 2025 Kumospace, Inc. d/b/a Fonzi®